
Abstract:

We assess syntactic and semantic issues of providing information to large applications in terms of integration and interoperation levels for technologies ranging from centralized and federated databases, through warehousing and mediation, to knowledge repositories. We draw conclusions for effective architectures, community acceptance, and research issues to be addressed.

The success of database technologies and the Internet has led to an explosion of available information. For almost any purpose we can obtain data about every topic, from many points of view, and from a wide variety of sources. There is much added value in assimilating these data, since the relationships among previously disjoint data provide novel insights and hence information that was not available before. Early database concepts to support such assimilation were based on large, centralized databases, with all data pushed to one central repository. Scalability issues led to the concept of federated databases, which shared a schema and enabled distributed search. Integration of data was achieved through interoperation at the level of communication technology. The results mirrored the sources exactly, and any semantic relationships or mismatches had to be handled by the applications.
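To make the last point concrete, here is a minimal Python sketch of a federated selection. It assumes two hypothetical sources, `us_store` and `eu_store`, that share one schema but, unknown to that schema, record prices in different currencies. It illustrates the behavior described above and does not describe any particular federated system.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Row:
    """One result row in the schema shared by all federated sources."""
    item: str
    price: float
    source: str


# Hypothetical autonomous sources. 'eu_store' reports prices in EUR and
# 'us_store' in USD, but the shared schema does not record the currency.
SOURCES = {
    "us_store": [Row("widget", 10.0, "us_store"), Row("gadget", 25.0, "us_store")],
    "eu_store": [Row("widget", 9.0, "eu_store")],
}


def federated_select(item: str) -> list[Row]:
    """Send the same selection to every source and concatenate the answers.

    No transformation is applied: the result mirrors the sources exactly,
    so the currency mismatch is passed on to the application unresolved.
    """
    results: list[Row] = []
    for rows in SOURCES.values():
        results.extend(r for r in rows if r.item == item)
    return results


for row in federated_select("widget"):
    print(row.source, row.price)
```

Running it prints `us_store 10.0` and `eu_store 9.0`. The two widget prices are not comparable, yet nothing in the federated layer flags that; the application must know it.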

Current warehouse technology is a direct descendant of that direction, but it formalizes the central database as an integrated copy, reducing the need for concurrent interoperation. Warehouses enable transformations of the source data to make it easier to search and drill down within the accumulated data. From the point of view of a using application, having all possibly interesting data instantly available in one place is very attractive. The low cost of storage motivates such collections, although performance remains an issue, since disk access times have not improved as much as storage capacity. The major cost of this approach is in keeping the warehouse up to date. For data sources that change frequently, the cost of shipping incremental updates to the warehouse and inserting them correctly is high, and read-to-write ratios for large data collections will be low as well, reducing the benefit/cost ratio. Since sources may differ in semantics, the required global resolution of their differences is also an ongoing task.
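The benefit/cost argument can be sketched as a toy model. The formula and every parameter value below are assumptions chosen for illustration, not measurements: benefit is taken as the number of reads served from the warehouse times the saving per read, and cost as the number of source updates times the cost of shipping and inserting each one.

```python
def warehouse_benefit_cost(reads_per_day: float, updates_per_day: float,
                           saving_per_read: float, cost_per_update: float) -> float:
    """Toy benefit/cost ratio for keeping a warehouse copy of one source.

    Benefit: each read answered from the warehouse saves one remote access.
    Cost: each source update must be shipped and inserted into the copy.
    All parameters are in hypothetical units chosen for illustration.
    """
    if updates_per_day == 0:
        return float("inf")  # a static source never needs refreshing
    benefit = reads_per_day * saving_per_read
    cost = updates_per_day * cost_per_update
    return benefit / cost


# Same read load, different volatility of the source.
stable = warehouse_benefit_cost(1000, 10, saving_per_read=1.0, cost_per_update=5.0)
volatile = warehouse_benefit_cost(1000, 2000, saving_per_read=1.0, cost_per_update=5.0)
print(stable, volatile)  # 20.0 0.1
```

With the same read load, the stable source yields a ratio of 20.0 while the frequently changing one yields 0.1: once the read-to-write ratio drops, maintaining the copy costs more than it saves.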

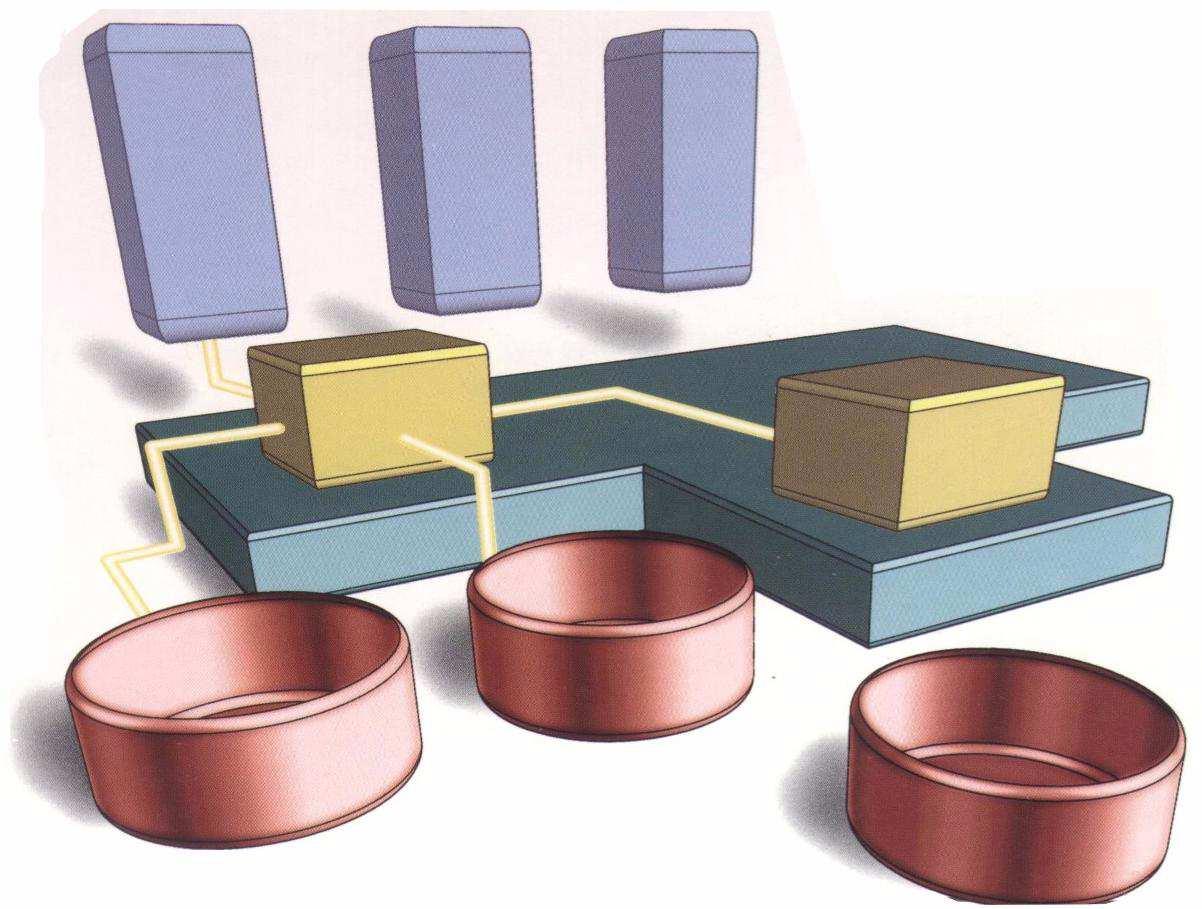

Moving to information integration through interoperation among data sources is an alternative approach to dealing with excessive data volumes when seeking information. Integration of data is provided in servers, which store only descriptive information and processing knowledge. Applications are expected to deal with higher-level concepts and to delegate selection and summarization. The concept of intelligent mediators hypothesizes that the required knowledge is domain specific, and can neither reside in the sources nor be effectively delegated to the individual applications. Since abstractions and summaries change more slowly than the data collected in the sources, the transmission requirements between the mediators and the applications are lower. The mediated architecture was predicated on using queries, both from the applications that required information and from the mediators that required data from the sources. Integration occurs at two levels: a domain-specific mediator must integrate data from multiple sources, using shared concepts and metrics, while applications may access various mediators to obtain information from multiple domains that are semantically disjoint. Because the linkages are dynamic, the portions of a system must interoperate effectively. Caching may reduce the load of data transfer for active topics. Having substantial and persistent information caches leads towards new architectures.
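A minimal sketch of a domain-specific mediator follows. The source wrappers (`us_source`, `eu_source`), the fixed currency rates, and the `PriceMediator` class are all hypothetical. The mediator queries the sources, maps their values onto a shared metric (USD), returns a summary rather than raw rows, and caches summaries for active topics.

```python
from statistics import mean


# Hypothetical source wrappers: each returns (item, price, currency) tuples.
def us_source(item):
    return [(item, 10.0, "USD"), (item, 12.0, "USD")] if item == "widget" else []


def eu_source(item):
    return [(item, 9.0, "EUR")] if item == "widget" else []


class PriceMediator:
    """Illustrative domain-specific mediator for price information.

    It queries its sources, converts values to a shared metric (USD), and
    returns a summary. Summaries are cached because they change more slowly
    than the underlying source data.
    """

    def __init__(self, sources, rates_to_usd):
        self.sources = sources
        self.rates = rates_to_usd  # assumed fixed conversion rates
        self.cache = {}

    def query(self, item):
        if item in self.cache:
            return self.cache[item]  # serve an active topic from the cache
        prices = [price * self.rates[currency]
                  for source in self.sources
                  for _, price, currency in source(item)]
        summary = {"item": item,
                   "n": len(prices),
                   "mean_usd": round(mean(prices), 2) if prices else None}
        self.cache[item] = summary
        return summary


mediator = PriceMediator([us_source, eu_source], {"USD": 1.0, "EUR": 1.1})
print(mediator.query("widget"))
```

The application sees only the summary; the conversion and aggregation knowledge stays in the mediator, and neither source has to change.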

Knowledge repositories, a technology not yet well formalized today, combine information interoperation with integration. A knowledge repository may use subscription, or push, technology to obtain data, but let the applications obtain data via queries, that is, pull technology. In the model we foresee, performance can be higher, since applications only need to pull integrated information from the repositories, while the sources publish and push information to the repositories. A higher level of intelligence, or prescience, is needed to define the content needed in such repositories. The sources should remain dynamically accessible so that results can be questioned and validated. The scale of systems can be increased, since the transmission rates and connectivity demands placed on the sources are reduced, and interoperability can focus on repository access. Resolution of semantic differences is performed when information is placed within the knowledge repositories.
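The push/pull split can be sketched as follows. This is an illustration under loud assumptions, not a formalization of knowledge repositories: `KnowledgeRepository` and `Source` are hypothetical classes, subscription is modeled as a plain list of subscribers, and semantic resolution is reduced to currency conversion at insertion time.

```python
from __future__ import annotations

from collections import defaultdict


class KnowledgeRepository:
    """Sources push into the repository; applications pull from it.

    Semantic differences (here only currency units) are resolved once, when
    a published record is inserted, rather than at query time. Each stored
    value keeps its source name so a result can be traced back and validated.
    """

    def __init__(self, rates_to_usd: dict[str, float]):
        self.rates = rates_to_usd        # assumed, fixed conversion table
        self.facts = defaultdict(list)   # item -> [(price_usd, source_name)]

    def publish(self, source: str, item: str, price: float, currency: str) -> None:
        # Push path: normalize to the shared metric before storing.
        self.facts[item].append((price * self.rates[currency], source))

    def pull(self, item: str) -> list[tuple[float, str]]:
        # Pull path: answered from the repository without contacting sources.
        return list(self.facts.get(item, []))


class Source:
    """Hypothetical autonomous source that pushes its changes to subscribers."""

    def __init__(self, name: str):
        self.name = name
        self.subscribers: list[KnowledgeRepository] = []

    def subscribe(self, repo: KnowledgeRepository) -> None:
        self.subscribers.append(repo)

    def update(self, item: str, price: float, currency: str) -> None:
        for repo in self.subscribers:
            repo.publish(self.name, item, price, currency)


repo = KnowledgeRepository({"USD": 1.0, "EUR": 1.5})
us, eu = Source("us_store"), Source("eu_store")
us.subscribe(repo)
eu.subscribe(repo)
us.update("widget", 10.0, "USD")
eu.update("widget", 8.0, "EUR")
print(repo.pull("widget"))  # [(10.0, 'us_store'), (12.0, 'eu_store')]
```

Because each value carries its source name, an application can return to the originating source to question a result, while routine queries never touch the sources.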

The discussion up to this point has used a three-level architectural model, although for each of the alternatives more levels have been contemplated and occasionally implemented. The high value and complexity of establishing routine linkages among related but autonomous services might require a layer that is distinct in style from repository services, which work best when focused on coherent domains.

Any increase in levels requires great care. The operational complexity, together with the likelihood of needing different standards for performance and representation at different levels, induces costs and risks that may be high. The business models also become more difficult, since services such as mediation and knowledge repositories require maintenance by experts, who must be compensated. The lack of simple, incremental payment schemes on the Internet hinders the emergence of information service providers. On the other hand, the acceptance of representation standards such as XML, the availability of transport middleware from many sources, and the expectations of the user community will provide motivation for progress in information integration and interoperation.

Gio Wiederhold:

Gio Wiederhold is an emeritus professor of Computer Science, Electrical Engineering, and Medicine at Stanford University, continuing part-time with ongoing projects and students. Since 1976 he has supervised 31 PhD theses in these departments. Current research topics in large-scale information systems include composition, access to simulations to augment decision-making capabilities, the development of an algebra over ontologies, and privacy protection in collaborative settings.

Wiederhold has authored and co-authored more than 350 publications and reports on computing and medicine, including Database Design, an early and popular textbook. He initiated knowledge-base research through a white paper to DARPA in 1977, combining databases and Artificial Intelligence technology. The results eventually led to the concept of mediator architectures.

Wiederhold was born in Italy, received a degree in Aeronautical Engineering in Holland in 1957, and earned a PhD in Medical Information Science from the University of California at San Francisco in 1976. Prior to his academic career he spent 16 years in the software industry. His career followed the development of computer technologies, starting with numerical analysis applied to rocket fuel and continuing through FORTRAN and PL/1 compilers, real-time data acquisition, and a time-oriented database system, until he eventually became a corporate software architect.

He has been elected a Fellow of the ACMI, the IEEE, and the ACM. He spent 1991-1994 as the program manager for Knowledge-based Systems at DARPA in Washington DC. He has been an editor and editor-in-chief of several IEEE and ACM publications. Gio's web page is http://www-db.stanford.edu/people/gio.html.